A GPU-Accelerated Raspberry Pi Board

A journey from Kennedy's moon speech to local AI hardware

We Choose Local AI

Scientists, distinguished viewers, ladies and gentlemen, I am delighted to be here, and I am particularly delighted to be here on this occasion. We meet at a platform noted for knowledge, via a technology noted for progress, in a community noted for strength, and we stand in need of all three. For we meet in an hour of change and challenge, in a decade of hope and fear, in an age of both knowledge and ignorance.

The greater our knowledge increases, the greater our ignorance unfolds. No one can fully grasp how far and fast we have come, but condense, if you will, the fifty thousand years of humanity's recorded history in a time span of but a half century. Stated in these terms, we know very little about the first forty years, except that by the end of them, advanced humans had learned to count on their fingers.

The first electronic computer came only a month ago. Large language models emerged just two days ago, and now in pursuit of artificial intelligence, we will have created human-level reasoning before midnight tonight. This is a breathtaking pace, and such a pace cannot help but create new challenges as it dispels old ones—new ignorance, new problems, new dangers.

Surely the opening vistas of AI promise high costs and hardship as well as high reward. So it is not surprising that some would have us stay where we are a little longer, to rest, to wait. But this society was not built by those who waited and rested and wished to look behind them. This society was built by those who moved forward, and so will AI.

The exploration of AI will go ahead, whether we join in it or not, and it is one of the great adventures of all time. No one who expects to be looked up to can be expected to stay behind in the race for AI. Those who came before us rode the first waves of the industrial revolution, the first waves of modern invention, the first wave of nuclear power, and this generation does not intend to founder in the backwash of the coming age of artificial intelligence.

We mean to be part of it. We mean to lead it. For the eyes of the world now look into our digital reflection, our collective intelligence, and the superintelligence beyond. We have vowed that we shall not see it governed by a hostile flag of conquest, but by a banner of freedom and peace. We have vowed that we shall not see AI used for weapons of mass destruction, but as an instrument of knowledge and understanding.

Yet the vows of this community can only be fulfilled if we build open and local AI, and therefore we intend to build open and local AI. Our leadership in science and industry, our hopes for peace and security, our obligations to ourselves as well as others—all require us to make this effort, to solve these mysteries, to solve them for the good of all, and to become the world's leading AI community.

But why, some say, local AI? Why choose this as our goal? And they may well ask, why climb the highest mountain? Why, 35 years ago, connect every computer on earth? Why does DeepSeek compete with OpenAI?

We choose to build local AI in this decade and do the other things, not because they are easy, but because we thought they would be easy.

From Vision to Reality: Building the Sentinel Core

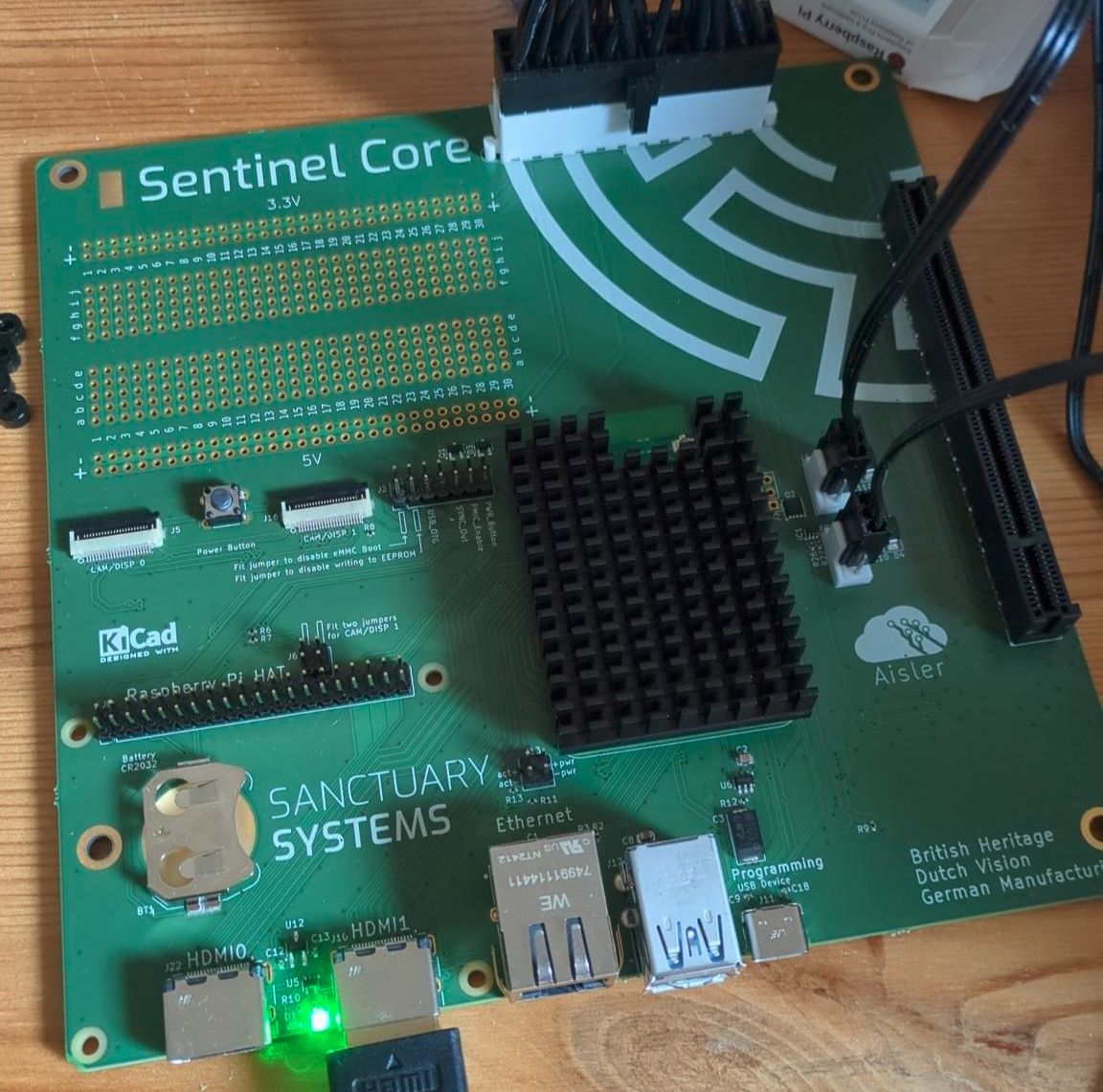

Hello, I'm Pepijn de Vos from Sanctuary Systems, and today I want to show you how I built a GPU-accelerated local AI voice assistant using a custom Raspberry Pi board.

The Inspiration

In a recent video, Jeff Geerling demonstrated how you can connect a Raspberry Pi to an external GPU and power supply through a rather convoluted setup. He showed how this configuration could encode videos, play games, and run large language models. Then, in his next video, he discussed the release of the new Compute Module 5.

This got me thinking: isn't there a Mini-ITX board where you can simply plug in a GPU and power supply instead of dealing with a complex external setup? I was particularly interested in upgrading my Home Assistant setup with a GPU to run large language models for a voice assistant.

I reached out to Jeff on Twitter asking if such a board existed. He replied that there were some partial solutions, but nothing quite right—and that he'd love to see it exist. My response? "Be right back."

The Plan

My initial plan seemed straightforward:

Take the official Compute Module I/O board

Resize it to Mini-ITX form factor

Replace the M.2 slot with a PCIe slot

Add an ATX power connector

Profit!

As it turned out, the execution was significantly more challenging than the concept.

The Challenges

I discovered that an open hardware project had already attempted something similar. However, it had stopped at the planning stage, which is exactly where the difficult work begins.

Beyond the arduous process of turning an idea into a real product, the main technical challenge is that PCIe is an extremely fast interface. You can't simply connect the wires together. Each differential pair has to be routed with precise trace width and spacing, and its two traces must be length-matched, or the link won't work. This is why PCBs have those distinctive parallel traces with small squiggles. The squiggles are length-matching meanders that keep both signals of a pair arriving together, preserving signal integrity.
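As a rough illustration of why the squiggles matter, the sketch below converts a length mismatch between the two traces of a differential pair into timing skew. It then compares that skew against a budget expressed as a fraction of one unit interval (UI). The propagation delay is assumed to be about 6.7 ps/mm, roughly FR-4 stripline with a dielectric constant near 4. The skew budget is assumed to be 5% of a UI. Both figures are illustrative and do not come from this board's design. Real limits depend on the PCIe specification, the PCB stack-up, and the module's hardware design guidance.

```python
# Rough intra-pair skew check for a PCIe differential pair.
# ASSUMPTIONS (illustrative, not taken from this board's design):
#   - propagation delay of ~6.7 ps/mm (roughly FR-4 stripline, Er ~ 4)
#   - skew budget of 5% of one unit interval (UI)

PROP_DELAY_PS_PER_MM = 6.7   # assumed; depends on stack-up and dielectric
SKEW_BUDGET_FRACTION = 0.05  # assumed budget, as a fraction of one UI


def unit_interval_ps(gigatransfers_per_s: float) -> float:
    """Duration of one bit on the wire in picoseconds (1 / transfer rate)."""
    return 1000.0 / gigatransfers_per_s


def pair_skew_ps(len_p_mm: float, len_n_mm: float) -> float:
    """Timing skew caused by a length mismatch between the P and N traces."""
    return abs(len_p_mm - len_n_mm) * PROP_DELAY_PS_PER_MM


def within_budget(len_p_mm: float, len_n_mm: float, gigatransfers_per_s: float) -> bool:
    """True if the pair's skew fits inside the assumed fraction of one UI."""
    budget_ps = SKEW_BUDGET_FRACTION * unit_interval_ps(gigatransfers_per_s)
    return pair_skew_ps(len_p_mm, len_n_mm) <= budget_ps


if __name__ == "__main__":
    rate = 5.0  # PCIe Gen 2 signalling rate: 5 GT/s, i.e. 200 ps per UI
    for p, n in [(52.0, 51.9), (52.0, 50.0)]:
        skew = pair_skew_ps(p, n)
        ok = within_budget(p, n, rate)
        print(f"P={p} mm, N={n} mm: skew {skew:.1f} ps, within budget: {ok}")
```

With these assumed numbers, a 0.1 mm mismatch costs well under 1 ps. A 2 mm mismatch costs about 13.4 ps, which exceeds the 10 ps budget at 5 GT/s. This is why the shorter trace of a pair gets meanders added until the two lengths match.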

After checking the design extensively and triple-checking every detail, I finally ordered a small batch of PCBs. While waiting for them to arrive, I also ordered a GPU, power supply, and case to assemble a complete system.

Testing and Assembly

When the PCBs arrived, I set up a test bench and began validation. Despite a few minor setbacks, the board worked on the first try. Mostly. I started conservatively, testing with a network card before risking my new GPU. Once that worked, I followed Jeff's instructions to compile a new kernel with the necessary AMD driver fixes.
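For the card-by-card testing described here, one quick way to confirm that a card has enumerated on the PCIe bus is to read what Linux exposes under /sys/bus/pci/devices. This is the same information lspci reports. The sketch below lists each device's vendor and class IDs and flags AMD display controllers. Vendor ID 0x1002 is AMD/ATI, and a base class of 0x03 means a display controller. This is an illustrative check only, not the procedure from Jeff's guide. The function names are hypothetical.

```python
from pathlib import Path

AMD_VENDOR_ID = 0x1002  # PCI vendor ID used by AMD/ATI GPUs
DISPLAY_CLASS = 0x03    # PCI base class for display controllers


def read_hex(path: Path) -> int:
    """sysfs stores IDs as text such as '0x1002' followed by a newline."""
    return int(path.read_text().strip(), 16)


def list_pci_devices(root: str = "/sys/bus/pci/devices"):
    """Return (address, vendor_id, class_code) for each PCI device under root."""
    root_path = Path(root)
    if not root_path.is_dir():
        return []  # not Linux, or sysfs is not mounted
    devices = []
    for dev in sorted(root_path.iterdir()):
        try:
            vendor = read_hex(dev / "vendor")
            cls = read_hex(dev / "class")
        except (OSError, ValueError):
            continue  # skip entries without readable IDs
        devices.append((dev.name, vendor, cls))
    return devices


def find_amd_gpus(root: str = "/sys/bus/pci/devices"):
    """Addresses of devices whose vendor is AMD and base class is display."""
    return [addr for addr, vendor, cls in list_pci_devices(root)
            if vendor == AMD_VENDOR_ID and (cls >> 16) == DISPLAY_CLASS]


if __name__ == "__main__":
    for addr, vendor, cls in list_pci_devices():
        print(f"{addr}  vendor=0x{vendor:04x}  class=0x{cls:06x}")
    print("AMD GPUs:", find_amd_gpus() or "none found")
```

If the GPU shows up here but no display driver binds to it, the problem is more likely the kernel or driver than the board's PCIe routing.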

With the GPU test successful, I was ready to assemble everything into the final case.

Build Process

Installing the Compute Module: The first step is inserting the Raspberry Pi Compute Module 5 (8 GB RAM, 32 GB storage) into its socket, firmly but carefully. After securing it with washers, I applied the thermal interface material and attached the heatsink, fastening it with the provided screws.

Power Supply Installation: I chose the be quiet! SFX Power 3 for this build. The case required removing the power supply bracket, mounting the PSU with the case screws, connecting the extension lead, and reinstalling the bracket with the power supply attached.

Motherboard Integration: With the four corner screws in place, I connected the fan header, front panel I/O, and ATX power connector to complete the motherboard installation.

GPU Installation: For the graphics card, I selected an AMD Radeon RX 7800 XT. After removing the rear faceplates (and unfortunately cutting myself in the process), I installed the GPU on a riser cable. Pro tip: insert the cables before the screws for easier access, unlike what I did in my somewhat chaotic assembly footage.

Results

The finished system looks like a standard Mini-ITX build (apart from the missing I/O plate), but when powered on, it boots into Raspberry Pi OS. Following Jeff's tutorial, I installed the necessary drivers, kernel, and llama.cpp.

The results were impressive. I achieved 12 to 14 tokens per second on a 14B-parameter model, which makes for a responsive local AI experience.
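To put 12 to 14 tokens per second into voice-assistant terms, the small sketch below converts a steady generation rate into the time needed to produce a reply. The 150-token reply length is a hypothetical example. The calculation ignores prompt processing, speech-to-text, and text-to-speech latency, all of which add to the real total.

```python
def generation_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate num_tokens at a steady decode rate.

    Ignores prompt processing and any speech pipeline latency.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    reply_tokens = 150  # hypothetical length of a short spoken answer
    for rate in (12.0, 14.0):  # measured range reported for the 14B model
        print(f"{rate:.0f} tok/s -> {generation_time_s(reply_tokens, rate):.1f} s")
```

At these rates, a 150-token reply takes about 10.7 to 12.5 seconds to generate in full. If the pipeline streams tokens into text-to-speech as they arrive, the first sentence of about 20 tokens is ready in roughly 1.4 to 1.7 seconds. Streaming is what makes this speed feel responsive in conversation.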

What's Next

I now have a fully functional Mini-ITX Compute Module 5 board with discrete graphics capability, perfect for running large language models locally. In upcoming content, I'll explore setting up Home Assistant integration, experimenting with different AI personalities, and other exciting applications.

The Sanctuary Systems Sentinel Core represents more than a hardware achievement. It's a step toward the vision of accessible, local AI that puts powerful capabilities directly in users' hands, free from the constraints of cloud dependencies and corporate gatekeepers.

This project demonstrates that with determination, careful engineering, and perhaps a touch of Kennedy-inspired ambition, we can build the tools needed for a more open and accessible AI future.
